The Big Players in Generative Engine Optimization (GEO): How Each Answers Queries

Let’s explore the inner workings of ChatGPT, Google Gemini, Microsoft Copilot, and Perplexity

The rise of Large Language Models (LLMs) has introduced an entirely new approach to finding information. Instead of the familiar list of ten blue links, web users can now get direct answers from AI.

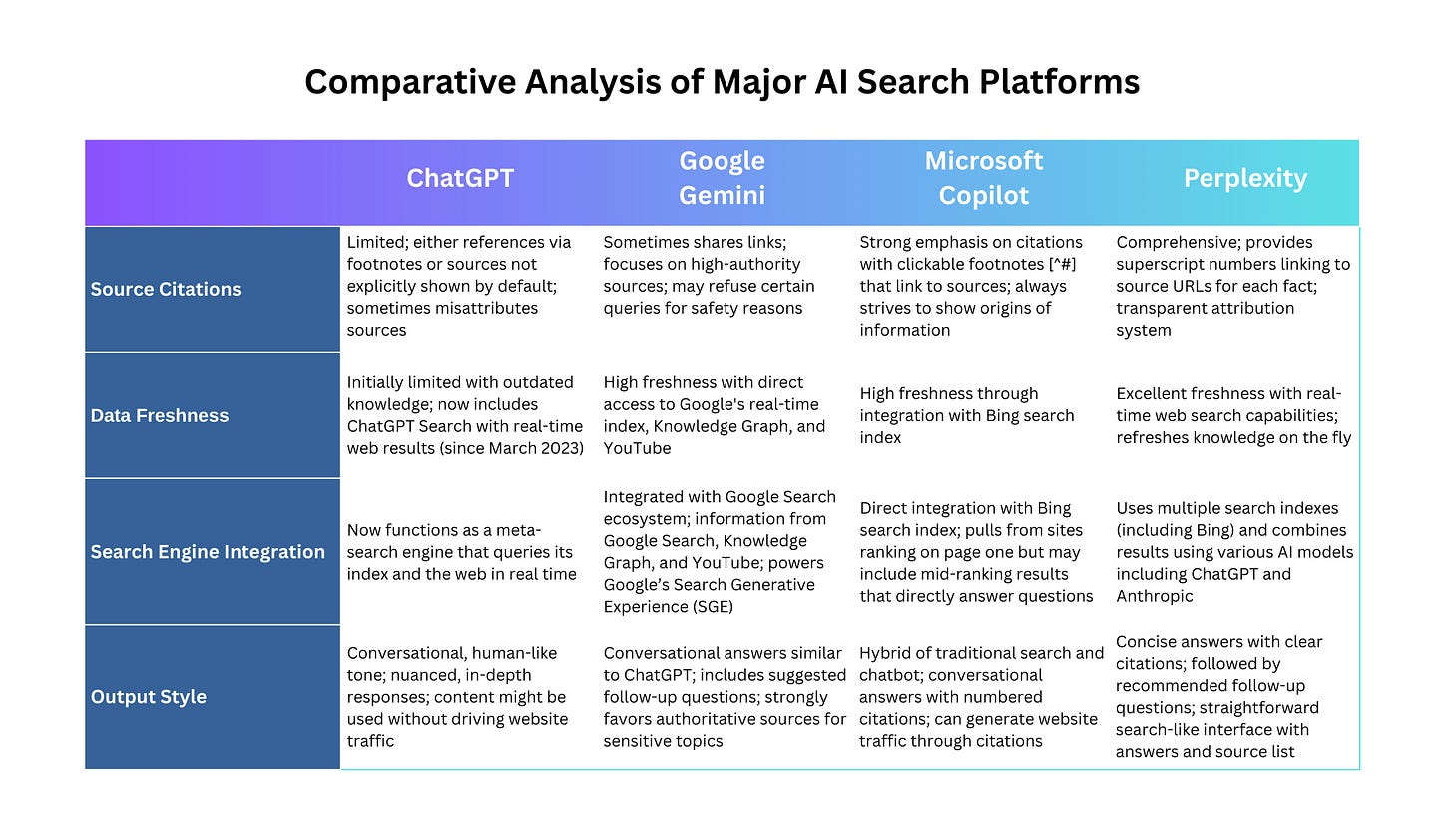

In this article, we’ll explore the major AI search players, including OpenAI’s ChatGPT, Google Gemini, Microsoft Copilot, and Perplexity, while examining how each generates responses and prioritizes information. We’ll highlight key differences in how they present content (e.g., ChatGPT’s conversational summaries vs. Perplexity’s cited snippets) and discuss how brands can adapt their Generative Engine Optimization (GEO) strategy for each model.

The New Players in Search: Major LLMs and AI Search Engines

Just a few years ago, Google was far and away the leading search engine. But today, millions of people are turning to AI for answers.

As of mid-2024, ChatGPT and Google Gemini together account for slightly more than three-quarters of global traffic to AI search platforms, with most of the remainder going to Perplexity AI and Microsoft Copilot. And adoption is rapidly growing. About 13 million Americans used AI as their primary search tool in 2023—a figure projected to soar to 90 million by 2027.

This anticipated growth means that marketing professionals must prepare now and start to understand the nuances of each of the four major AI providers on the market.

ChatGPT

Launched in late 2022, ChatGPT kicked off the AI search trend. It provides answers based on its training data and has only recently begun to fetch live web results (starting in March 2023). Previously, it wouldn’t cite sources or show links; instead, it combined its knowledge into a single answer delivered in a human-like tone.

ChatGPT’s conversational approach makes it extremely user-friendly and capable of nuanced, in-depth responses. But given that it was initially walled off from the open web, some of its knowledge tended to be outdated.

OpenAI has since introduced ChatGPT Search, which allows it to pull in current information. ChatGPT is now essentially a meta-search engine that queries its own index and the web in real time, then summarizes the results. Even in this mode, the presentation is conversational: sources are either referenced via footnotes or not shown explicitly to the user by default.

ChatGPT Search isn’t flawless, however. A Columbia Journalism Review analysis found that it can misattribute or blur publisher content. In one instance, the platform attributed an Orlando Sentinel quote to a Time magazine article, illustrating that its answers can mix up sources.

For brands, ChatGPT represents a new kind of search engine. Your content might be read and reused by AI, but it won’t always drive website traffic. Because users won’t always be given a link to your website when ChatGPT delivers an answer, ensuring your core messaging is accurate and well represented in the information it draws on is key.

Google Gemini

Google entered AI search with Bard (later rebranded as Gemini), which launched in 2023 as a standalone AI chat product similar to ChatGPT. With the rollout of Google’s Search Generative Experience (SGE, since renamed AI Overviews), Google started presenting these AI answers at the top of search results.

Gemini is trained on Google’s vast ecosystem of information. It can tap into Google Search, Google Knowledge Graph, YouTube, and more. In practice, Gemini provides conversational answers similar to ChatGPT but with direct access to real-time information from Google’s index. It will also include suggested follow-up questions and sometimes share links.

A key difference noted by its users is that Gemini tends to stick to high-authority sources. In one study, all the content used for sensitive topics like health or finance came from highly trusted sites (universities, governments, well-known publications), whereas other platforms were more willing to draw from smaller sites.

Gemini is effectively constrained by Google’s ranking algorithms and quality guidelines, which require it to prioritize credibility and safety. This means that while it can provide up-to-date answers (e.g., citing a news article from today), it often favors what it considers the most authoritative take on a topic. Google has also built in other safeguards. For example, Gemini might refuse certain queries, or offer only general advice, when asked about medical or political topics.

From a GEO perspective, content that already ranks well on Google or is deemed authoritative is more likely to be pulled into Gemini summaries. Ensuring your brand’s content meets Google’s quality standards (accurate, well-sourced, trustworthy) is ultimately critical for Gemini visibility.

Microsoft Copilot

Microsoft joined the AI party in early 2023 by integrating OpenAI’s GPT-4 model with its Bing search engine. Microsoft Copilot (previously named Bing Chat) is available directly on the Bing search site, the Microsoft Edge browser sidebar, and within other Microsoft products.

It functions as a hybrid of traditional search and chatbot. The AI answers questions in a conversational manner and displays footnotes with links to its sources. Behind the scenes, Copilot uses the Bing search index to retrieve relevant pages and then the LLM composes an answer with references.

The result for the user is an answer that feels like ChatGPT but with small numbered footnotes ([1], [2]) that link to sources you can click. For example, if you ask Bing, “What are the benefits of OKR software for startups?”, it might produce a five-point bulleted explanation and cite both a SaaS blog post and a Gartner report via footnotes. Citations are a defining feature of Microsoft Copilot: it strives to always show where information came from to increase user trust in its answers.

In terms of what content it displays, Copilot is influenced by Bing’s ranking algorithms. It often pulls from sites that rank on page one of Bing for that query. However, it will sometimes pick out specific snippets that answer the question directly, even if that information comes from a mid-ranking result.

That means backlinks and traditional SEO signals still play a role, but a smaller one. Copilot might choose a sentence from a niche forum post if it directly addresses the question, something a traditional Bing search result might not highlight.

For brands, Copilot offers the opportunity to still generate website traffic, as its citations mean users can click through to your site.

Ensuring your content is optimized for Bing (which often overlaps with Google’s SEO basics but with slightly less emphasis on backlinks) can help you secure those valuable citations in Copilot answers.

Perplexity AI

Among the new wave of AI search engines, Perplexity AI stands out as a tool explicitly designed to provide answers with source citations. It’s an independent “answer engine” that launched in 2022 and has gained popularity among researchers and professionals for its transparent approach.

Ask Perplexity a question and it will return a concise answer with superscript numbers linking to source URLs for each fact. For example, a Perplexity response might say, “Open rates for marketing emails average around 21% but can vary by industry.” Clicking the citation would then take you to the article or report where that statistic came from.

Additionally, Perplexity recommends follow-up questions that can help users dig deeper into a topic. This feature is similar to the “People also search for” section at the bottom of Google search results.

Under the hood, Perplexity performs a real-time web search (leveraging Bing’s index and other sources) and then uses a combination of AI models, including OpenAI’s GPT models and Anthropic’s Claude, to synthesize an answer.

Because it searches at query time, its information stays current. This makes it ideal for questions like “What were the latest product announcements at TechCrunch Disrupt 2025?”, where up-to-date info is required.

Another hallmark of Perplexity is its no-login-needed accessibility and straightforward usability. The interface looks like a search engine, but when you hit enter, you get a concise answer followed by a list of sources.

Perplexity’s prioritization of information is purely content-focused: it will choose whichever sources directly answer the query, whether that’s a well-known site or a small blog. In fact, Perplexity has no problem elevating a lesser-known site if it holds the nugget of information needed to answer the user’s query.

For marketers, this means content relevance and clarity can trump site authority on Perplexity. If your blog has the best answer to a specific question, Perplexity may pick it up even if your domain is new or low-authority. But because Perplexity does show sources, having a recognizable and trustworthy name still matters. After all, a user might be more inclined to click through to a site they’re familiar with.

How AI Search Engines Generate Answers

Understanding how the AI models we’ve explored produce results is crucial to formulating a GEO strategy. As we’ve previously covered, traditional search engines use web crawlers to index pages and algorithms to rank those pages for a query. AI search engines, on the other hand, generate an answer on the fly by analyzing content.

LLM “retrieval + generation”

Most AI search engines use a two-step process. They “retrieve” relevant information and then “generate” a synthesized answer.

The retrieval step might use a search index (Bing, Google, etc.) or a custom index (OpenAI has its own index for ChatGPT Search). The generation step is done by the AI model which reads the various retrieved texts and combines them into a coherent response.
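To make the two steps concrete, here is a minimal Python sketch under heavily simplified assumptions. The three-page `INDEX`, the example.com URLs, and the `retrieve` and `build_prompt` functions are all hypothetical; real engines use far richer ranking (term weighting, embeddings) than the raw word-overlap score used here, and the actual call to a language model is left out. The sketch only shows the shape of the pipeline: score passages against the query, keep the best few, and hand them to the model as numbered sources it can cite.

```python
import re
from collections import Counter

# Hypothetical toy index. A real engine would query Bing's, Google's,
# or its own index; here it is just a list of (url, text) pairs.
INDEX = [
    ("https://example.com/sso-react",
     "To implement SSO in a React app, redirect users to the identity provider, "
     "then handle the OIDC callback and store the token."),
    ("https://example.com/pricing",
     "Our pricing plans start at $10 per month for small teams."),
    ("https://example.com/sso-overview",
     "Single sign-on lets users log in once and access many applications."),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, index, k: int = 2):
    """Step 1 (retrieval): score passages by word overlap with the query, keep the top k."""
    q = Counter(tokenize(query))
    scored = []
    for url, text in index:
        # Counter & Counter keeps the minimum count of each shared word.
        overlap = sum((q & Counter(tokenize(text))).values())
        if overlap > 0:
            scored.append((overlap, url, text))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(url, text) for _, url, text in scored[:k]]


def build_prompt(query: str, passages) -> str:
    """Step 2 (generation input): number the passages so the model can cite them."""
    lines = [f"[{i}] {text} (source: {url})" for i, (url, text) in enumerate(passages, 1)]
    return ("Answer the question using only the numbered sources below, "
            "citing them like [1].\n\n" + "\n".join(lines) + f"\n\nQuestion: {query}")
```

With the query “How do I implement SSO in a React app?”, the React SSO page scores highest, the general SSO overview comes second (its only shared word is “in”), and the pricing page is dropped because it shares no words with the question. That filler-word match is one reason production systems weight terms or compare embeddings rather than counting raw overlap.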

This means that contextual relevance is king. AI isn’t just matching keywords; it’s reading entire pieces of content to see whether they answer the user’s question. For example, if a user asks, “How do I implement SSO in a React app?”, AI will look for passages that describe the implementation steps. It might find an answer on a forum that contains the exact code snippet and opt to use that information because it’s so precise.

Most of the time, AI prioritizes content quality and relevance to the query intent over traditional SEO signals. As we covered, Perplexity will happily quote a lesser-known site if it has a pertinent answer, whereas Google’s organic results might have buried that site due to low domain authority.

We’re not saying authority is meaningless for AI (the models have been trained on large datasets, often established search indexes, and may inherently trust well-known sources), but it’s less of a factor. In general, once AI has a pool of content to pull from, the best answer wins.

Ranking without backlinks

Backlinks and keyword density also hold less sway in AI-generated results. An LLM doesn’t know how many backlinks a page has (and, frankly, doesn’t care). It values whether the content on the page can answer the question at hand. We’re already seeing that pages with only a few or even no backlinks can surface in AI answers if they contain a clear, well-structured explanation.

A concise paragraph on a niche blog could be featured by AI over a top-ranked page that wanders off-topic or has too much fluff. From a GEO standpoint, this shifts focus toward on-page content quality and completeness. It’s a bit of a return to early search days when having the exact answer on your page was often enough to rank.
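The contrast between link-weighted ranking and answer selection can be caricatured in a few lines. This is a toy, not how Google, Bing, or any LLM actually scores pages: the `Page` records, backlink counts, URLs, and both ranking functions are hypothetical. The point is simply that a scorer which never reads the `backlinks` field can put a zero-backlink page first when its text matches the question.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    backlinks: int  # hypothetical link count
    text: str


def terms(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(query: str, text: str) -> float:
    """Share of distinct query terms that appear in the page text (0.0 to 1.0)."""
    q = terms(query)
    return len(q & terms(text)) / len(q) if q else 0.0


def link_weighted_rank(query: str, pages: list[Page]) -> list[Page]:
    # Caricature of authority-heavy ranking: backlinks dominate,
    # relevance only breaks ties.
    return sorted(pages, key=lambda p: (p.backlinks, relevance(query, p.text)), reverse=True)


def relevance_only_rank(query: str, pages: list[Page]) -> list[Page]:
    # Caricature of answer selection: the backlinks field is never read.
    return sorted(pages, key=lambda p: relevance(query, p.text), reverse=True)


pages = [
    Page("https://big-site.example/growth", 5000,
         "Ten things every founder should know about growth, culture, and hiring."),
    Page("https://niche-blog.example/sso-cost", 0,
         "For a small SaaS team, SSO setup cost is mostly engineering time."),
]

query = "What does SSO setup cost a SaaS team?"
print(link_weighted_rank(query, pages)[0].url)   # prints https://big-site.example/growth
print(relevance_only_rank(query, pages)[0].url)  # prints https://niche-blog.example/sso-cost
```

The off-topic page wins only while backlinks drive the sort; once ranking depends on the text alone, the concise niche answer (matching six of the eight query terms) comes first.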

Conversational presentation

Each AI platform presents answers in a different format. ChatGPT and Gemini give long-form textual answers, often in a friendly or explanatory tone. They also rephrase and merge information from multiple sources. For example, ChatGPT might take a definition from one site and an example from another and weave them together—with no indication that two sources were used.

This means redundant content across the web will be synthesized. If your blog post says the same thing as multiple other articles, AI might just mention that point without specifically sourcing you.

On the other hand, unique insights or clearly phrased answers stand out. If your content expresses an idea in a particularly compelling way, AI might latch onto it. Among marketing professionals, there’s already talk of “being the source” for a distinctive fact or quote so that LLMs repeat your version.

Platforms like Microsoft Copilot and Perplexity present answers with inline citations, which is advantageous for content publishers. Perplexity’s model will often form a sentence lifted directly (or lightly paraphrased) from a source and put a citation right after it.

In these cases, having content that succinctly answers a common question can make you the cited source. This is reminiscent of Google’s featured snippets, with one difference: AI might splice together parts of sentences. Still, the takeaway is clear: bite-sized statements in your text are more likely to be used by LLMs.

Microsoft Copilot citations, appearing as footnotes, often link to the source page even if only one line was used. That means that being one of the references that Copilot pulls from can drive traffic. Ensuring that vital facts and answers in your content are near the top of the page or in a prominent, easy-to-understand format (like a bulleted list or a bolded summary) can increase the chance AI selects your text for citation.
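The numbered-citation format that Perplexity and Copilot use can be mimicked with a small helper. In this hypothetical sketch, the answer has already been generated as (sentence, source URL) pairs; the function attaches a bracketed number to each sentence and reuses a number when the same URL is cited again, which is roughly how a single source page can earn several citations in one answer. The function name, URLs, and sentences are illustrative assumptions, not either product’s actual output format.

```python
def render_with_citations(claims: list[tuple[str, str]]) -> str:
    """Append a bracketed citation number to each sentence, then list the sources.

    A URL cited more than once keeps the number it received first.
    """
    numbers: dict[str, int] = {}  # url -> citation number, in first-seen order
    body = []
    for sentence, url in claims:
        if url not in numbers:
            numbers[url] = len(numbers) + 1
        # Place the marker before the sentence's final period: "... part [1]."
        body.append(f"{sentence.rstrip('.')} [{numbers[url]}].")
    sources = [f"[{n}] {url}" for url, n in numbers.items()]
    return " ".join(body) + "\n\nSources:\n" + "\n".join(sources)


claims = [
    ("Integrating with existing identity providers is often the hardest part.",
     "https://example.com/sso-guide"),
    ("Scaling the identity provider adds operational load.",
     "https://example.org/forum-answer"),
    ("Security has to be balanced against user convenience.",
     "https://example.com/sso-guide"),
]

print(render_with_citations(claims))
```

Here the hypothetical SSO guide is cited twice under the same number [1], while the forum answer gets [2], mirroring how one well-phrased page can anchor several lines of a cited answer.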

Different strengths and focus areas

The four big AI platforms all have their own strengths which ultimately influence the type of content each one favors. ChatGPT is excellent for broad, explanatory answers or multi-step reasoning. It excels at tasks like comparing options, making creative suggestions, or summarizing a complex topic into simple terms. It might prefer content that provides a good narrative or step-by-step breakdown since it strives to assemble a helpful answer.

Google Gemini, with its integration into search, is best used for factual queries and the latest information. It will tap into Google News, forums, and videos, so having fresh content in various formats that aligns with trending queries can be beneficial. For example, a company that quickly publishes a blog post about a new regulation or technology might get picked up by Gemini.

Gemini also provides multiple perspectives (e.g., “According to Source A… However, another perspective from Source B…”). This suggests that having a unique take or differing answer could get you featured as an alternative view.

Perplexity and Copilot are often used for research-oriented queries (by users who want the facts with citations). For those AI engines, content that includes concrete data, quotes from experts, or specific details is like gold. If your article titled “Top 5 SaaS Trends for 2025” includes a statistic like “SaaS companies saw a 40% increase in AI adoption in 2024,” Perplexity might directly quote that stat with the citation.

If a competitor’s similar article has no specific numbers, AI may lean toward yours because it is richer in information. In short, content with evidence (numbers, names, dates, definitions, etc.) is favored when AI builds a fact-based answer.

AI Search Engines in Action

To illustrate these differences, consider this scenario: A user asks AI, “What are the challenges of implementing single sign-on (SSO) for a SaaS application?”

ChatGPT might produce a three-paragraph essay or multiple bullet points that mention general challenges (complexity, security concerns, user experience issues, etc.) in a conversational tone. It will only sometimes provide sources and it might not mention specific brands. If your company published a great whitepaper on this topic, ChatGPT’s answer could very well incorporate points from it without the user knowing.

Google Gemini might give a shorter answer, perhaps bulleting a few key challenges. It might say, “Challenges include integration difficulties, scaling the identity provider, and balancing security with user convenience.”

It could follow up with “Source: Acme Security Blog” as a small notation with a link to the website, especially if that content already ranks high on Google.

Microsoft Copilot might answer with bullet points and put footnote [1] after “integration difficulties” (linking to your blog) and footnote [2] after “scaling the identity provider” (linking to a Stack Overflow answer). A user who finds this answer helpful may click those footnotes to read more.

Perplexity would likely produce a concise paragraph or numbered list. In another tab, you’d see the sources it pulled each bit from—perhaps a developer forum post and an article (maybe yours) on SSO best practices. It might also recommend additional follow-up questions to ask.

From a GEO standpoint, you could be cited if you had the best line on any one aspect of the answer.