GEO vs SEO: What Optimization Tactics Still Matter for AI Search?

Large Language Models (LLMs) take a different approach to finding and presenting information than traditional search algorithms do. So which SEO tactics still matter to modern search marketers?

From Keywords and Links to Semantic Understanding

Traditional search engines have long used keyword matching and link-based rankings to find and order search results. When a user enters a query, the engine breaks it into keywords and retrieves pages containing those terms (or similar terms), then ranks those pages based on factors like relevance and backlinks. In this traditional search model, a page’s authority and on-page keyword usage determine its position in search results.
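To make that model concrete, here is a deliberately simplified sketch of keyword-based retrieval: an inverted index maps each term to the pages containing it, and matching pages are ranked by how many query terms they contain, with backlink count as a secondary authority signal. The pages, the scoring rule, and the function names are hypothetical; real engines weigh far more signals.

```python
from collections import defaultdict

# Hypothetical pages: (url, text, number of backlinks)
PAGES = [
    ("a.example/crm", "best crm software 2025 ranked", 1200),
    ("b.example/crm", "crm software reviews and pricing", 300),
    ("c.example/tips", "sales tips for small teams", 5000),
]

def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (no stemming, no synonyms)."""
    return text.lower().split()

def build_inverted_index(pages):
    """Map each term to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text, _ in pages:
        for term in tokenize(text):
            index[term].add(url)
    return index

def keyword_search(query: str, pages, index) -> list[str]:
    """Retrieve pages sharing at least one query term, then rank them by
    (number of matched terms, backlink count), highest first."""
    backlinks = {url: links for url, _, links in pages}
    matches = defaultdict(int)
    for term in set(tokenize(query)):
        for url in index.get(term, ()):
            matches[url] += 1
    return sorted(matches, key=lambda url: (matches[url], backlinks[url]), reverse=True)

index = build_inverted_index(PAGES)
print(keyword_search("best CRM software 2025", PAGES, index))
```

Note that the page with the most backlinks (`c.example/tips`) is never retrieved for this query, because it shares no words with it: in this model, links only reorder pages that already match the keywords.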

AI-powered search retrieves, interprets, and presents information very differently—focusing on context and meaning rather than keywords and links.

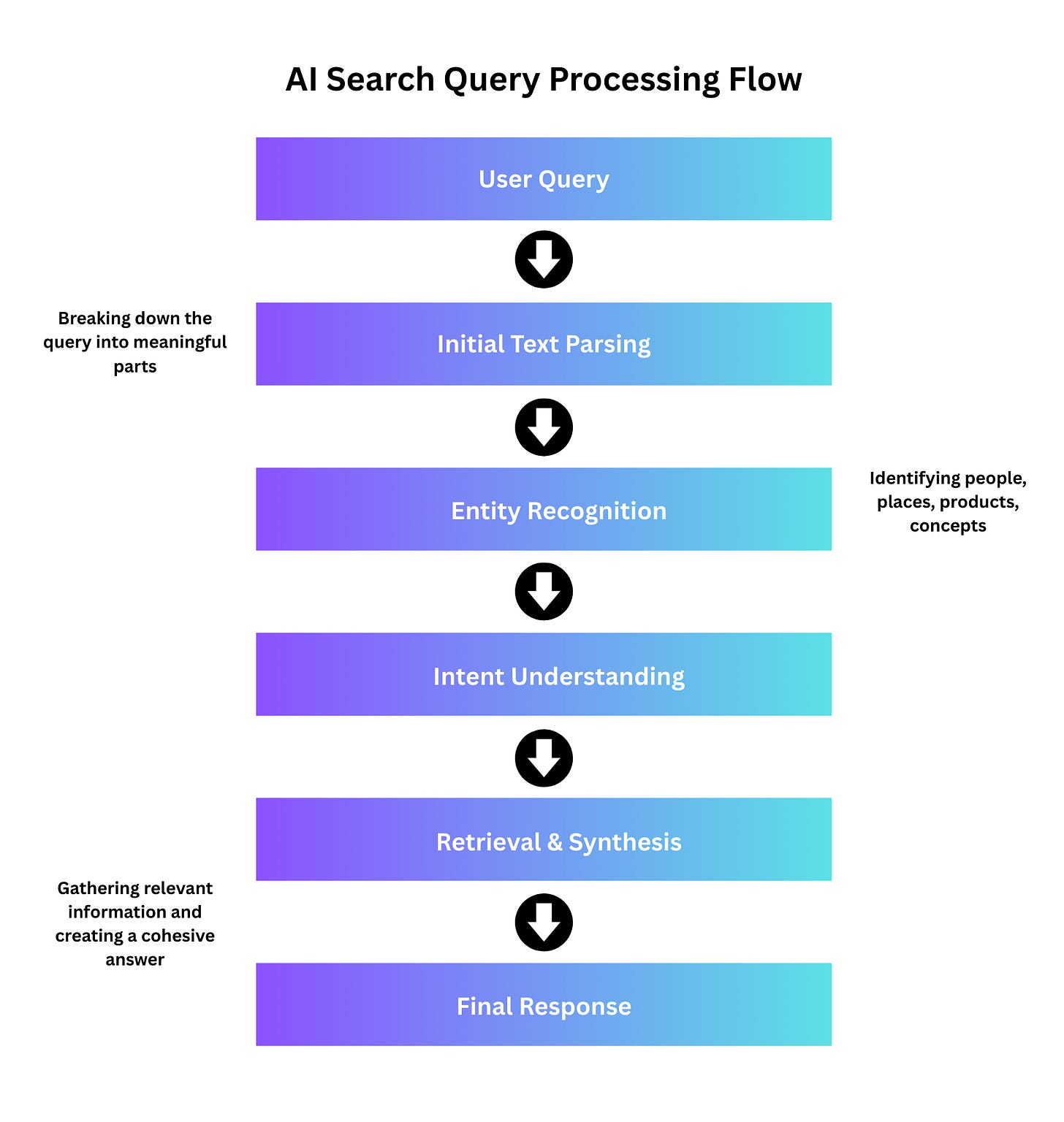

Instead of acting like a librarian searching an index, AI behaves more like a research assistant that understands the topic. It uses Natural Language Understanding (NLU) to parse queries and content. This means AI can recognize entities (people, places, products, etc.), grasp the intent behind a question, and interpret context.

For example, if a user searches for “best CRM software 2025,” the old approach looks for pages optimized for that exact phrase. As a result, the software recommended to the user depends largely on what the best-optimized pages say.

An AI-powered search, however, will recognize that “CRM software” is the topic and might understand that specific entities like Salesforce or HubSpot are widely considered the top products on the market. It could then present contextual information about those entities (features, pricing, user reviews) from multiple sources, even if the content doesn’t use the exact query phrasing. This shift from keyword-based retrieval to entity-based, semantic search allows AI engines to provide nuanced responses that better address the user’s true intent.

Link-based ranking vs. contextual relevance

Traditional search relies on algorithms like Google’s PageRank. It uses the number and quality of backlinks to determine what pages are authoritative and rank them accordingly.
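The core PageRank idea can be sketched as a power iteration: each page repeatedly shares its score among the pages it links to, and a damping factor (0.85 is the value commonly cited from the original PageRank paper) models a surfer who sometimes jumps to a random page instead. The four-page link graph is hypothetical, and production ranking systems combine this kind of signal with many others.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 200, tol: float = 1e-10) -> dict[str, float]:
    """Power-iteration PageRank over a mapping of page -> outbound links."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages without outbound links spread their score evenly over all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        converged = sum(abs(new_rank[p] - rank[p]) for p in pages) < tol
        rank = new_rank
        if converged:
            break
    return rank

# Hypothetical link graph: most pages link to "hub"; nothing links to "c".
graph = {
    "hub": ["a"],
    "a": ["hub", "b"],
    "b": ["hub"],
    "c": ["hub"],
}
scores = pagerank(graph)
for page, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{page}: {score:.3f}")  # "hub" ranks highest, "c" lowest
```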

AI platforms rely less on a link-driven approach. Instead, the AI component of a search engine, such as Google’s Search Generative Experience (SGE) or Microsoft Copilot in Bing, generates a single answer compiled from various sources. In doing so, AI prioritizes content that is contextually relevant, accurate, and comprehensive over content that merely has a high link count.

A recent analysis illustrates this stark difference: sources cited in Google SGE overlapped with the top 10 organic search results only about 4.5% of the time. A likely explanation is that AI values information richness and contextual fit and is willing to go beyond page one to answer a user’s query.

AI retrieval techniques (RAG and knowledge integration)

AI search often uses Retrieval-Augmented Generation (RAG) to gather the latest available information. With RAG, AI first uses a traditional search index to fetch relevant content or data points based on the user’s query. It then feeds that information into the language model as context for answer generation. This approach helps AI provide up-to-date responses and reduces the chance of hallucinations (made-up facts).
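A minimal sketch of that retrieve-then-generate flow, assuming a toy in-memory corpus and simple word-overlap scoring in place of a real search index. It stops at building the prompt that would be handed to a language model, since the generation call depends on whichever model API a system uses. The documents and function names are hypothetical.

```python
import re

# Hypothetical corpus standing in for a search index.
DOCUMENTS = {
    "news-1": "The city council approved the new bike lane plan on Tuesday.",
    "wiki-1": "A bike lane is a portion of a road reserved for cyclists.",
    "forum-1": "Anyone know good pizza places downtown?",
}

def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of `text`."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Step 1: fetch up to k documents sharing the most words with the query."""
    q = words(query)
    scored = sorted(DOCUMENTS.items(), key=lambda item: len(q & words(item[1])), reverse=True)
    return [(doc_id, text) for doc_id, text in scored[:k] if q & words(text)]

def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Step 2: place the retrieved passages in the model's context, tagged
    with IDs so the generated answer can cite its sources."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer the question using only the sources below and cite them by ID.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

query = "What did the council decide about the bike lane?"
prompt = build_prompt(query, retrieve(query))
print(prompt)
```

Grounding the model in retrieved passages, and asking it to cite them, is what lets a RAG system answer with fresher information than its training data and point users back to sources.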

For example, Microsoft Copilot will perform a web search in real time, retrieve relevant results (e.g., news articles, forum posts, Wikipedia entries), and then compose an answer using that information. The underlying idea is that while the AI model has learned vast knowledge from training data, it can be augmented with the latest information—combining the strengths of search engines and generative AI.

In addition to pulling in information from around the web, some AI systems integrate with knowledge graphs to improve understanding and accuracy. A knowledge graph is a structured database of factual information (think of Google’s Knowledge Graph that interconnects people, places, companies, etc., based on their relationships). By tapping into these graphs, an AI system can retrieve trusted facts and contextual relationships that aren’t directly stated in any single web source.
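A knowledge graph can be pictured as a set of (subject, relation, object) triples. The sketch below uses a handful of hypothetical facts to show how a system might resolve a vague phrase to a concrete entity by following relationships rather than matching words on a page. Real knowledge graphs are vastly larger and use dedicated storage and query languages.

```python
# Hypothetical facts stored as (subject, relation, object) triples.
TRIPLES = {
    ("GDPR", "is_a", "data privacy law"),
    ("GDPR", "enacted_by", "European Union"),
    ("GDPR", "applies_to", "businesses processing EU residents' data"),
    ("Acme Shop", "is_a", "small e-commerce business"),
    ("Acme Shop", "sells_to", "EU residents"),
}

def objects(subject: str, relation: str) -> set[str]:
    """Every object linked to `subject` by `relation`."""
    return {o for s, r, o in TRIPLES if s == subject and r == relation}

def subjects(relation: str, obj: str) -> set[str]:
    """Every subject linked to `obj` by `relation` (reverse lookup)."""
    return {s for s, r, o in TRIPLES if r == relation and o == obj}

# Resolve "EU data privacy law": data privacy laws enacted by the European Union.
laws = subjects("is_a", "data privacy law") & subjects("enacted_by", "European Union")
print(laws)  # {'GDPR'}
print(objects("GDPR", "applies_to"))
```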

For instance, if you ask a traditional search engine a complex question like, “How does the new EU data privacy law affect small e-commerce businesses?”, it would likely return several articles about the law, ranked by authority, that are somewhat (but not completely) e-commerce focused.

AI search, on the other hand, would recognize entities in the query and use a knowledge graph to understand the context. “EU data privacy law” likely refers to GDPR and “small e-commerce businesses” is a specific business category.

It could then retrieve specific provisions from the law text via a document search, cross-reference guidance from a government FAQ site, and check common impacts on small e-commerce businesses from relevant sources. The AI response would be a synthesized explanation with multiple sources cited that gives a point-by-point answer to the user’s question.

Let’s look at another example that highlights the difference between traditional and AI search. An exercise conducted by the digital marketing agency Wray Ward found that a blog article from a building materials company once held the featured snippet for the query “STC rating wall assembly” on Google.

In the AI-powered SGE result for that same query, Google produced a multi-part answer. It defined STC (using that blog and a ScienceDirect entry as sources) and then listed ways to improve a wall’s STC rating, all within the AI answer box at the top of the search results. The original blog was still cited (it appeared as the first source link), but the AI answer also drew additional context from other sources. This demonstrates that an AI search doesn’t just pick one “best” page; it delivers a complete answer by merging information.

For marketing professionals, this means the playing field is somewhat leveled by AI—but also less predictable. It’s no longer enough to simply be the most authoritative site on a topic. Your content also needs to provide the specific context or answers AI is likely to use.

What Traditional SEO Tactics No Longer Matter?

The rise of AI search is rewriting the rules of what optimizations actually drive visibility. Many traditional SEO tactics lose effectiveness when users favor AI platforms over search engines—and when the search engines themselves are presenting AI-generated results. Let’s explore some formerly tried-and-true SEO tactics that matter far less for Generative Engine Optimization (GEO).

Backlink quantity as a standalone metric

In classic SEO, a large number of backlinks pointing to your page is a strong signal of authority (as we’ve covered, links are the essence of Google’s PageRank algorithm). For years, SEO professionals have come up with thoughtful ways to amass as many inbound links as possible.

However, AI cares far more about what a page says than how many other sites link to it. When an AI model generates an answer, it works from the content it has retrieved, not from the page’s backlink profile. A page with ten thousand backlinks but thin content might rank well on a traditional SERP, but it is unlikely to be included in an AI answer if it doesn’t provide novel information or context.

Let’s consider the example of a high-authority travel blog with thousands of backlinks to its post optimized for the term “best beaches in Maui.” In reality, this blog probably exists to route traffic to its affiliate links and digital ads, not necessarily to help travelers plan their vacations. But because the site is so well optimized, it shows up on SERPs when people are researching Maui beaches.

In the modern era of GEO, AI might not be inclined to cite this popular content. Instead, it might prefer a more detailed post on Maui beaches because of its contextual value, even if that site has only a few inbound links.

Exact-match keywords and keyword density

Old-school SEO professionals tend to obsess over placing exact query keywords in titles, headings, and multiple times in the text. Some practitioners even track keyword density as a metric.
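Keyword density is commonly calculated as the share of a page’s words taken up by the target phrase: occurrences × words in the phrase ÷ total words × 100. A minimal sketch of that calculation follows; tools differ in how they tokenize and count multi-word phrases, so treat this as one common variant rather than a standard definition.

```python
import re

def keyword_density(text: str, phrase: str) -> float:
    """Percentage of words in `text` accounted for by occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = phrase.lower().split()
    n = len(target)
    if not words or n == 0:
        return 0.0
    # Count exact, in-order occurrences of the phrase in the word sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits * n / len(words)

sample = "Best running shoes: our best running shoes guide ranks running shoes for everyone."
print(round(keyword_density(sample, "running shoes"), 1))  # 46.2
```

A sentence like the sample, where the phrase makes up nearly half the words, is exactly the kind of stuffing this section argues no longer pays off.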

Modern search algorithms—even before AI—have moved away from exact-match requirements. Thanks to advancements like Google’s Hummingbird and RankBrain, search algorithms have gotten better at understanding synonyms and intent.

But with the emergence of AI search, keyword stuffing has been rendered completely obsolete. LLMs interpret queries for meaning and can find an answer in text whether or not it matches the user’s precise wording.

What matters is semantic relevance. For the query “How to fix a leaking faucet,” AI can draw from an article titled “DIY Guide: Repairing a Dripping Tap” because it understands that “dripping tap” is equivalent to “leaking faucet.” Having an exact-match title offers no special advantage in an AI context.
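AI search systems typically compare queries and passages as dense vector embeddings produced by a neural model. As a toy stand-in that needs no model, the sketch below maps words to shared concepts with a small hand-written synonym table (a hypothetical assumption, not how LLMs actually represent meaning) and scores titles by cosine similarity.

```python
import math
import re
from collections import Counter

# Hypothetical synonym table mapping surface words to shared concepts.
CONCEPTS = {
    "fix": "repair", "repairing": "repair", "repair": "repair",
    "leaking": "leak", "dripping": "leak", "leak": "leak",
    "faucet": "tap", "tap": "tap",
}
STOPWORDS = {"how", "to", "a", "the"}

def concept_vector(text: str) -> Counter:
    """Count concepts (not raw words) in `text`, ignoring stopwords."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(CONCEPTS.get(t, t) for t in tokens if t not in STOPWORDS)

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

query = concept_vector("How to fix a leaking faucet")
for title in ["DIY Guide: Repairing a Dripping Tap", "How to Fix a Leaking Roof"]:
    print(title, round(cosine(query, concept_vector(title)), 2))
# DIY Guide: Repairing a Dripping Tap 0.77
# How to Fix a Leaking Roof 0.67
```

The tap-repair guide shares almost no words with the query yet scores higher than the roof article, which repeats the query’s wording but is about a different topic.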

That means SEO tactics like creating separate pages that target minor keyword variations (e.g., one page for “best running shoes” and another for “best jogging shoes”) are no longer effective. AI sees through the wording to the core intent.

Traditional PageRank factors (anchor text, link juice, etc.)

Other PageRank-focused tactics revolve around optimizing anchor text (the clickable text of a link) and sculpting internal link flow (links between pages on your site). For instance, having keyword-rich anchor text pointing to an important page was believed to signal relevance for that keyword and boost the page’s authority.

These factors are less important in GEO. An AI answer might cite a source, but it doesn’t care which pages on your site link to it or what the anchor text says.

It cares about the content on the page itself. Techniques like acquiring exact-match anchor text backlinks or structuring internal links to funnel authority to a specific page have little to no impact on whether your content gets used in an AI answer.

High domain authority trumping relevance

In the past, having a high-authority website (one with a strong backlink profile and long-standing trust) could propel even mediocre content to rank well. Smaller or newer sites have long struggled to outrank “the big guys” due to this authority gap.

AI search is changing that dynamic. Since an AI model selects text from across the web, it can choose a paragraph from a new startup’s blog over a Fortune 500 company’s site, if that paragraph answers the question effectively. As we’ve already covered, even Google SGE is willing to cite sources that aren’t top-ranking domains.

For example, a well-known medical site might lose out on an AI answer for a specific health query if a lesser-known specialist’s blog provides a clearer explanation. The high-authority site will still be in the LLM’s dataset, but the AI might decide the specialist’s content is a better source to present to the user.

This is already translating into traffic changes on the web. One study estimates that websites that dominate traditional SERPs could experience a decrease in organic traffic ranging anywhere from 18% to 64% due to Google SGE.

This is simply because users can get their answers from the AI summary at the top of the page without needing to explore any high-ranking sites. The takeaway? Domain prestige is no longer a free pass to search visibility.

Exact match domains and minor technical tricks

Legacy tactics like buying exact-match domain names (e.g., carinsurancequotes.com for “car insurance quotes” queries) or loading a page with excess tags and irrelevant keywords have been declining in effectiveness for years. With AI, they matter even less.

Similarly, subtle on-page SEO tricks like hiding extra keywords in the HTML are unlikely to help with GEO. AI models are hard to fool with hidden text or code-level tweaks because they effectively read the page as a human would. If anything, such tricks could be counterproductive if they make content less readable or trustworthy.

To recap, AI search moves the focus away from the signals about content (links, keywords, URLs, headers, etc.) to the content itself. Traditional SEO tactics that aim to game the ranking algorithm without improving actual content quality are either neutralized or greatly devalued in GEO.

As a result, marketing professionals should deprioritize things like mass link-building campaigns, exact-match keyword targeting, and other old ranking hacks. Instead, their strategies must evolve to align with what AI is looking for—informational value and clarity.

What Traditional SEO Tactics Still Matter?

While many longstanding SEO tactics now matter far less, others will continue to play a role in the era of GEO. In fact, many core principles are even more important now because they help AI understand and trust your content.

AI platforms may use new methods to respond to queries, but they still value high-quality information. Let’s explore the traditional SEO tactics that marketing teams should continue to use moving forward.

Content depth and comprehensiveness

AI loves in-depth, well-structured content. Since it will often cherry-pick snippets from different parts of a page to form an answer, completely covering a topic in one place increases your chances of being used.

If your content thoroughly answers not only the main question but also related follow-up questions, AI might use your page for multiple aspects of a response. High-quality, long-form content that is logically organized (with clear sections, descriptive headings, and summary boxes) provides more fodder for AI to work with.

That means quality beats quantity. A single authoritative page on a topic is more valuable than five thin pages targeting slight keyword variations. Google’s E-E-A-T principles (Experience, Expertise, Authoritativeness, and Trustworthiness) still apply, so content that demonstrates real knowledge is likely to be favored by AI models.

A good practice is to audit your content. Does it fully answer the who/what/when/where/why/how of the topic? Are there gaps a user (or AI) might need to fill from elsewhere? By beefing up content to be truly comprehensive, you increase its usefulness to an AI engine.

Clarity, structure, and readability

The way you structure your content matters more than ever because AI models prefer content that’s easy to parse. Using clear headings and subheadings (H2s and H3s), bullet points or numbered lists, and tables for data allows AI to identify relevant information more easily.

For example, if a page contains a neatly formatted table comparing different products, AI might use it to summarize what each one offers. Likewise, an FAQ section or a summary box at the end of a blog post can be used to concisely answer a specific question.
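Clear headings give a retrieval system natural boundaries for splitting a page into passages. The sketch below uses Python’s built-in `html.parser` to group a page’s text under its most recent H2 or H3 heading. It only illustrates the idea; it is not a description of how any particular AI search engine chunks pages, and the sample HTML is hypothetical.

```python
from html.parser import HTMLParser

class HeadingChunker(HTMLParser):
    """Collect page text into sections keyed by the nearest preceding H2/H3."""

    def __init__(self):
        super().__init__()
        self.sections = {"(intro)": []}
        self.current = "(intro)"
        self.in_heading = False
        self.heading_text = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self.in_heading = True
            self.heading_text = []

    def handle_endtag(self, tag):
        if tag in ("h2", "h3") and self.in_heading:
            self.in_heading = False
            self.current = " ".join(self.heading_text).strip()
            self.sections.setdefault(self.current, [])

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self.in_heading:
            self.heading_text.append(text)
        else:
            self.sections[self.current].append(text)

page_html = """
<h1>Wi-Fi Router Guide</h1>
<h2>Restart the router</h2><p>Unplug it for 30 seconds.</p>
<h2>Check the cables</h2><ul><li>Ethernet</li><li>Power</li></ul>
"""
chunker = HeadingChunker()
chunker.feed(page_html)
chunker.close()
for heading, texts in chunker.sections.items():
    print(heading, "->", " ".join(texts))
```

Each heading becomes a self-describing label for the passage beneath it, which is why descriptive H2s and H3s make individual sections easier to lift into an answer.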

Ensuring your content is well-organized and skimmable benefits not only human readers but also AI algorithms. You should also use proper grammar and straightforward language, as AI is effectively “reading” your text and needs to understand what you’re trying to convey.

Think of it this way. To earn a spot in an AI-generated answer, your content has to successfully teach the AI something.

Factual accuracy and evidence

AI systems are trained to avoid incorrect information and often cross-check facts across sources. As a result, content that is factually accurate, up-to-date, and supported by evidence has a higher chance of being trusted by AI.

That means it’s worth including statistics, dates, names, and references in your content—and ensuring each one is 100% correct.

Accuracy is one area where your content can offer real value to AI. If it provides concrete evidence, like the results of a study or an official data point, it becomes an attractive source for an AI answer.

The reasoning is that such content comes across as more authoritative and specific. Imagine two articles about the benefits of solar panels. One says, “Solar energy can reduce electricity bills significantly,” and another says, “Solar energy can reduce electricity bills by 50% on average in the first year” (along with a source for that figure). An AI summarizer is more likely to gravitate to the second statement because it’s more detailed and provides a reference the system can verify.

Furthermore, being accurate protects your brand. While there is no concrete evidence yet, it’s logical to assume that AI models might penalize or avoid sources known to be misleading. So the traditional SEO principle of “don’t publish fluff, publish facts” still applies in the modern AI era.

Structured data and semantic markup

Structured data—or schema markup—has been an SEO best practice for years. And today, it helps both search engines and AI interpret content.

By adding schema to your pages to mark up information like products, reviews, FAQs, organizational structure, and more, you make your content machine-readable in a detailed way.

Schema allows AI systems to extract specific facts or answers. For example, marking up an FAQ section with FAQPage schema or a recipe with Recipe schema means AI can recognize question-answer pairs or recipe content directly instead of having to infer them from the text.
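As an illustration, the sketch below uses Python’s `json` module to generate FAQPage markup as JSON-LD, one of the formats schema.org markup can be embedded in. Each question is a `Question` whose `acceptedAnswer` is an `Answer`; the question-and-answer text is placeholder content.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD <script> block from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'

print(faq_jsonld([
    ("What is an STC rating?",
     "Sound Transmission Class rates how well a wall assembly blocks airborne sound."),
]))
```

The generated `<script>` block goes in the page’s HTML alongside the visible FAQ; the markup should describe content that readers can actually see on the page.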

Search engines already rely on structured data to generate rich results and answer boxes, and it will likely continue to be important for AI search.

Looking ahead, content that feeds Google’s Knowledge Graph or other knowledge bases via structured data is better positioned to be used in AI answers. For instance, if your article about a historical event uses schema to tag the event date, persons involved, and locations, an AI answer to “When and where did X event happen?” can directly provide that information with confidence.
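Continuing that historical-event example, here is a sketch of how the date, place, and people might be tagged with schema.org `Event` markup, again generated as JSON-LD. All values are placeholders, and using the `attendee` property for the people involved is an assumption; choose whichever schema.org property best fits your content.

```python
import json

# Placeholder values for a hypothetical event; replace with your article's facts.
event = {
    "@context": "https://schema.org",
    "@type": "Event",
    "name": "Example Historical Event",
    "startDate": "1901-05-09",  # ISO 8601 date
    "location": {
        "@type": "Place",
        "name": "Example City Hall",
        "address": "Example City, Example Country",
    },
    # Assumption: `attendee` is used here to tag the people involved.
    "attendee": [
        {"@type": "Person", "name": "Jane Example"},
        {"@type": "Person", "name": "John Example"},
    ],
}

print(json.dumps(event, indent=2))
```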

Structured data essentially provides AI with a cheat sheet to your content’s key points, improving the chance your information is included or cited. From a marketing perspective, organizations that build a content knowledge graph (linking their entities and pages via schema) help AI infer facts about their brand and provide more accurate answers to potential customers.
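One way to link entities and pages via schema is a JSON-LD `@graph`: the organization gets a stable `@id` (an identifier you choose, conventionally a URL on your domain), and each article refers back to it by that `@id` instead of repeating its details, while `sameAs` connects the organization to its profiles elsewhere. The domain, names, and profile URL below are hypothetical placeholders.

```python
import json

SITE = "https://www.example.com"  # placeholder domain
ORG_ID = f"{SITE}/#organization"  # stable identifier for the brand entity

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": ORG_ID,
            "name": "Example Co.",
            "url": SITE,
            "sameAs": ["https://www.linkedin.com/company/example-co"],  # placeholder profile
        },
        {
            "@type": "Article",
            "@id": f"{SITE}/blog/geo-vs-seo#article",
            "headline": "GEO vs SEO: What Optimization Tactics Still Matter for AI Search?",
            # Reference the organization node by @id rather than duplicating it.
            "publisher": {"@id": ORG_ID},
        },
    ],
}

print(json.dumps(graph, indent=2))
```

Reusing the same `@id` across every page on a site is what turns isolated markup into a connected graph of the brand’s entities.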

Brand authority and trust

While we noted that domain authority alone has little impact on GEO, overall brand recognition and trustworthiness heavily influence AI search results.

AI models are fine-tuned to prefer reputable sources in order to avoid disinformation. They often use filters or human-in-the-loop feedback that focuses them on trusted domains (e.g., government sites, established publishers, etc.), especially when answering sensitive queries.

This means that establishing your brand as an authority in your niche remains crucial. You should continue with marketing efforts that boost your credibility, like having subject-matter experts author your content, collecting positive user reviews, and generating brand mentions in recognized publications.

Technical SEO fundamentals

Even the most advanced AI can’t use your content if it can’t discover it in the first place. Crawling, indexing, site speed, mobile-friendliness, and security (HTTPS) are still foundational in GEO.

Technical SEO continues to matter because AI platforms draw largely from the same indexes of the web that were built by traditional search engines. If your site has poor crawlability (e.g., broken links, missing sitemaps, disallowed sections), your content will likely not be indexed by either search engines or AI systems.
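A quick crawlability check is whether your robots.txt blocks the crawlers you want to reach your content. Python’s standard-library `urllib.robotparser` can evaluate rules offline. The robots.txt below is hypothetical, and `GPTBot` (OpenAI’s crawler) is used only as an illustrative user-agent name; check each AI provider’s documentation for the agent names it actually uses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("GPTBot", "Googlebot"):
    for path in ("/blog/geo-guide", "/private/report", "/admin/"):
        allowed = parser.can_fetch(agent, f"https://www.example.com{path}")
        print(f"{agent:10} {path:18} {'allowed' if allowed else 'blocked'}")

print(parser.site_maps())  # sitemaps declared in robots.txt (Python 3.8+)
```

Because a crawler that matches a specific `User-agent` group follows only that group, `GPTBot` here is blocked from `/private/` but not from `/admin/`, a subtlety that is easy to miss when auditing by eye.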

Likewise, if your website is slow or has unreliable hosting, AI systems may struggle to fetch your content in real time, which undermines the live retrieval step that many AI search tools rely on. Ensure your site is well structured (logical URL hierarchy, clean navigation) and uses proper HTML semantics.

Another aspect of technical SEO that still matters is keeping content crawlable without heavy reliance on client-side scripts. Research has found that AI systems sometimes miss content or structured data injected with JavaScript. Where possible, serve important content in the raw HTML, or use server-side rendering for single-page applications (SPAs), so nothing is lost.
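A rough way to see what a crawler that does not execute JavaScript would get is to extract the visible text from the raw HTML, skipping `<script>` and `<style>` contents, and check whether your key content is there. The two page variants below are hypothetical; a real audit would fetch the live HTML and compare it with the fully rendered page.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collect text outside <script> and <style> elements, without running any JavaScript."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.chunks.append(data.strip())

def visible_text(raw_html: str) -> str:
    extractor = VisibleTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    return " ".join(extractor.chunks)

# Server-rendered: the answer is in the HTML itself.
ssr_page = "<main><h1>Router troubleshooting</h1><p>Restart the router first.</p></main>"
# Client-rendered: the answer only appears after JavaScript runs.
csr_page = ('<main id="app"></main>'
            '<script>document.getElementById("app").innerHTML = '
            '"<p>Restart the router first.</p>";</script>')

for name, page in (("server-rendered", ssr_page), ("client-rendered", csr_page)):
    print(name, "Restart the router" in visible_text(page))
```

The client-rendered page contains the answer only inside a script, so a non-rendering crawler sees an empty `<main>` element.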

Technical terminology aside, all the behind-the-scenes SEO work that makes your site accessible and understandable to algorithms remains vital for GEO.

User intent focus and relevance

Understanding and aligning with user intent has become a major theme of modern SEO, and it remains highly relevant for AI search. If your content misses what users (and therefore AI systems) are looking for with a given query, it is unlikely to be included in responses.

Make sure each piece of content you produce has a clear intent alignment. That means knowing what questions it needs to answer and what information it needs to provide. Doing so will naturally increase its chances of being chosen by AI for a relevant query.

For instance, a page that directly answers “how to troubleshoot a Wi-Fi router” in a step-by-step manner is more likely to be used by AI for that question than a generic page about “home internet tips” where router information is buried. This ties back to content planning and structure. While broad, comprehensive content has its place, sometimes it’s worth creating a specific FAQ or how-to article.

At a high level, top-performing content in an AI world still starts with top-performing content for users. The difference is in how it is consumed: AI reads the content and relays it to the user, rather than the user reading it directly on your site.

That means optimizing for AI search is largely about making your content so good and so clear that AI cannot ignore it. If you can do that, both traditional search engine algorithms and AI models will recognize the value you provide to web users.